The first post in this series on visual field construction discussed eye motion and the problem of creating a stable visual field. A solution based on photo-stitching was presented and shown to yield visually pleasing results. Stitching, however, is an after-the-fact remedy: it post-processes visual input and delivers results only after the image in question may already have come and gone.

This post proposes a second method of stabilization that relies on “motion feed forward”. When our brain decides to move our body, a complex series of neuronal messages goes out to every part of it (the feet, the torso, the head and elsewhere) to effect that move. It would make sense for the visual system to siphon off some of these commands and prepare the visual field for an incoming adjustment. For example, if the head is moving a centimeter to the right, the current image should be expected to shift to the left. Anticipating such changes should help the visual cortex sort out what is coming into the retina.
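The head-shift example can be made concrete with an ideal pinhole camera, used here purely as an illustrative assumption: let $f$ be the focal length in pixels and $(X, Z)$ the horizontal position and depth of a scene point. Moving the camera a distance $t_x$ to the right changes the point's image coordinate from $x = fX/Z$ to

$$
x' = f\,\frac{X - t_x}{Z} = x - f\,\frac{t_x}{Z}, \qquad \Delta x = -f\,\frac{t_x}{Z}.
$$

A rightward move ($t_x > 0$) therefore shifts the image to the left, and nearby objects shift more than distant ones. With hypothetical values $f = 500$ px, $t_x = 1$ cm and $Z = 1$ m, the predicted shift is $\Delta x = -500 \times 0.01 / 1 = -5$ px.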

We know, in fact, that when this information link is broken or in error, we feel nauseous and sometimes really sick. Ask anyone with motion sickness about it.

For the Eye, we simulate this mechanism by feeding information about the stepper-motor movement into the visual field construction code. If we command the stepper motor to move 5 degrees to the left, we can use the sensor size and the new position to compute a new perspective transformation that compensates for the change.
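The compensation step can be sketched in Python under a few stated assumptions: an ideal pinhole camera whose optical center lies on the pan axis (so a pan is a pure rotation), square pixels, the principal point at the image center, and a known lens focal length. The focal length is an added assumption; the post mentions only sensor size. Under these assumptions, the frame captured after a rotation R relates to the reference (pre-move) frame by the homography H = K R K⁻¹, where K is the intrinsic matrix. All function names and camera numbers below are hypothetical placeholders, not the Eye's actual code or hardware.

```python
import numpy as np


def intrinsics(image_w, image_h, sensor_w_mm, focal_mm):
    """Pinhole intrinsic matrix K.

    Assumes square pixels (so the sensor width alone fixes the pixel
    focal length) and a principal point at the image center.
    """
    f_px = focal_mm * image_w / sensor_w_mm  # focal length in pixels
    cx, cy = image_w / 2.0, image_h / 2.0
    return np.array([[f_px, 0.0, cx],
                     [0.0, f_px, cy],
                     [0.0, 0.0, 1.0]])


def pan_rotation(pan_deg):
    """Camera rotation about the vertical (y) axis.

    Uses the x-right, y-down, z-forward camera convention, in which a
    positive angle turns the optical axis to the right, so a pan to the
    left is a negative angle.
    """
    a = np.radians(pan_deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s],
                     [0.0, 1.0, 0.0],
                     [-s, 0.0, c]])


def feed_forward_homography(K, pan_deg):
    """Homography mapping pixels of the frame captured AFTER the
    commanded pan back into the reference (pre-move) frame.

    For a pure camera rotation R, a scene point seen at pixel p1 in the
    new frame sits at p0 ~ K R K^-1 p1 in the reference frame.
    """
    R = pan_rotation(pan_deg)
    return K @ R @ np.linalg.inv(K)


def apply_homography(H, x, y):
    """Map one pixel (x, y) through H, including the perspective divide."""
    u, v, w = H @ np.array([x, y, 1.0])
    return u / w, v / w


# Hypothetical camera: 640x480 image, 4.8 mm wide sensor, 3.6 mm lens.
K = intrinsics(640, 480, sensor_w_mm=4.8, focal_mm=3.6)
# Hypothetical stepper command: pan 5 degrees to the left.
H = feed_forward_homography(K, pan_deg=-5.0)
x0, y0 = apply_homography(H, 320.0, 240.0)
print(f"new frame center lands at ({x0:.1f}, {y0:.1f}) in the reference frame")
```

With these numbers the pixel focal length is 480 px, so the center of the new frame maps about 480·tan(5°) ≈ 42 px to the left of the reference center, which is where the camera is now pointing. Because H is computed from the motor command alone, it is available before the new frame arrives. Warping a whole frame into the visual field would mean applying H to every pixel, for example with OpenCV's `cv2.warpPerspective`; the sketch maps single points to stay dependency-light.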

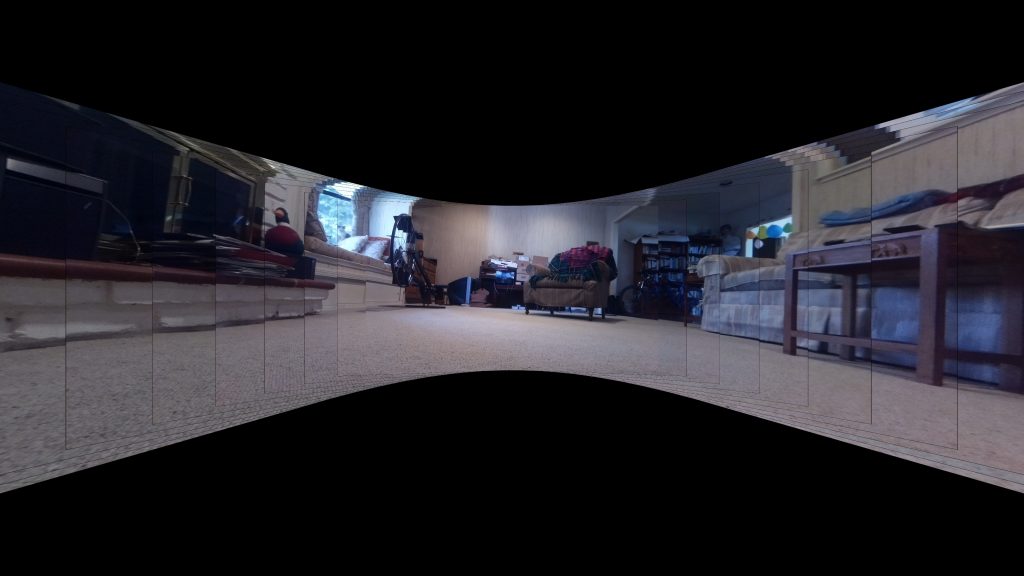

The image above shows a visual field scan constructed using a motion feed forward algorithm. More details will follow as time permits.